My Experience and Thoughts on Backups

Note: This post was originally published on my personal blog. I have copied the content to this blog.

I have two main ways of storing data in my environment. First, there’s Longhorn, which stores data locally on the worker nodes, replicates it between them and serves it across the network via node-to-node communication. Second, there’s an Asustor AS6510T, which provides access to its storage via NFS, SMB, AFP, etc.

Longhorn backups are pretty simple: you point Longhorn at an S3 bucket and it backs up the volumes at the block level. Backups are incremental, uploading only the blocks that have changed since the last backup. Restores are also fairly simple, although they require downtime. To restore, you delete the local volume and select the backup as the source to recreate it. Longhorn recreates the PVC and populates it with the data from the selected backup, and once it’s in place you can spin your workload back up. The catch is that this doesn’t allow manipulating or restoring individual files; a restore returns the entire volume to an earlier point in time. I configured these backups, have restored from them before and have generally had no issues with them.
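To make “only uploading blocks that have changed” concrete, here is a minimal Python sketch of block-level change detection. It only illustrates the general idea and is not how Longhorn tracks changes internally: it assumes fixed-size blocks compared by SHA-256 against the block hashes recorded for the previous backup. `BLOCK_SIZE`, `block_hashes` and `changed_blocks` are hypothetical names.

```python
import hashlib

# Hypothetical block size for illustration; Longhorn's real value may differ.
BLOCK_SIZE = 2 * 1024 * 1024


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Return the SHA-256 hex digest of each fixed-size block of `data`."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(previous: list[str], current: list[str]) -> list[int]:
    """Indices of blocks that are new or differ from the previous backup.

    Only these blocks would need to be uploaded for an incremental backup.
    """
    return [
        i for i, digest in enumerate(current)
        if i >= len(previous) or previous[i] != digest
    ]


# Tiny 4-byte blocks so the example is easy to follow.
old = block_hashes(b"a" * 8 + b"b" * 8, block_size=4)
new = block_hashes(b"a" * 8 + b"c" * 4 + b"b" * 4, block_size=4)
print(changed_blocks(old, new))  # [2]
```

Only the third block differs, so only that block would be uploaded; the other three are already in the bucket from the previous backup.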

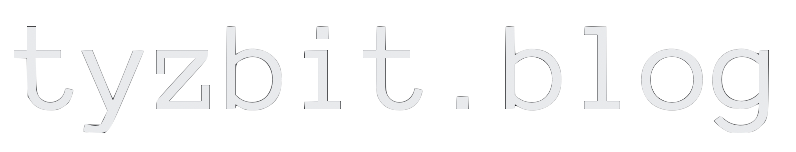

The Asustor has two volumes, one on SSDs and one on HDDs, and both take local snapshots every 6 hours. Restoring data from these snapshots is simple: selecting “Preview” opens a snapshot like a folder, and files can be copied to and from it using the web interface. Snapshots are not backups, but they are handy for recovering previous data, and being able to do it down to the file/folder level is great.

The Asustor can also do external backups via rsync, but this proved insufficient for my needs. And thus begins my tale of how to back up the data on my Asustor NAS.

Duplicati on the NAS

I originally opted for Duplicati, which is available as an app on the Asustor NAS. I chose it because running on the NAS meant the only network traffic would be egress from the NAS to the storage backend, which should have been more efficient. Long story short, this did not work. I had issues with the Duplicati database, and the backup ran at 5 MB/s. At that rate it would take over a month to back up my data, although in theory subsequent backups would be incremental. Duplicati itself seemed okay, so I then tried running it as a workload on my k8s cluster.
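As a rough sanity check on durations like these, throughput converts to data volume with simple arithmetic. The sketch below assumes a constant transfer rate and decimal units (1 TB = 1,000,000 MB); the function names are hypothetical. At 5 MB/s, 30 days of continuous uploading moves about 13 TB, so “over a month” implies a dataset of at least that size (the exact size isn’t stated here).

```python
SECONDS_PER_DAY = 86_400


def transferable_tb(rate_mb_per_s: float, days: float) -> float:
    """Decimal TB moved at a constant rate (MB/s) over `days` days."""
    return rate_mb_per_s * SECONDS_PER_DAY * days / 1_000_000


def days_needed(size_tb: float, rate_mb_per_s: float) -> float:
    """Days needed to move `size_tb` decimal TB at a constant rate (MB/s)."""
    return size_tb * 1_000_000 / (rate_mb_per_s * SECONDS_PER_DAY)


print(round(transferable_tb(5, 30), 2))  # 12.96
```

Real transfers rarely hold a constant rate, so treat these numbers as lower bounds on elapsed time rather than predictions.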

Duplicati on k8s

Setting up Duplicati was easy, since there was a k8s-at-home Helm chart for it. The speeds were better, around 10-15 MB/s, but even though it was relatively stable, keeping a full backup running for 12 days straight still proved impossible. This was compounded by an ongoing issue I have with the Asustor NAS, which I might write another post about. The long and short of it, though, is that Duplicati’s database-based approach just couldn’t handle the interruptions, which isn’t entirely Duplicati’s fault. Overall, I feel Duplicati is well suited to backing up single workstations but falls short for backing up large amounts of data.

Duplicacy on k8s

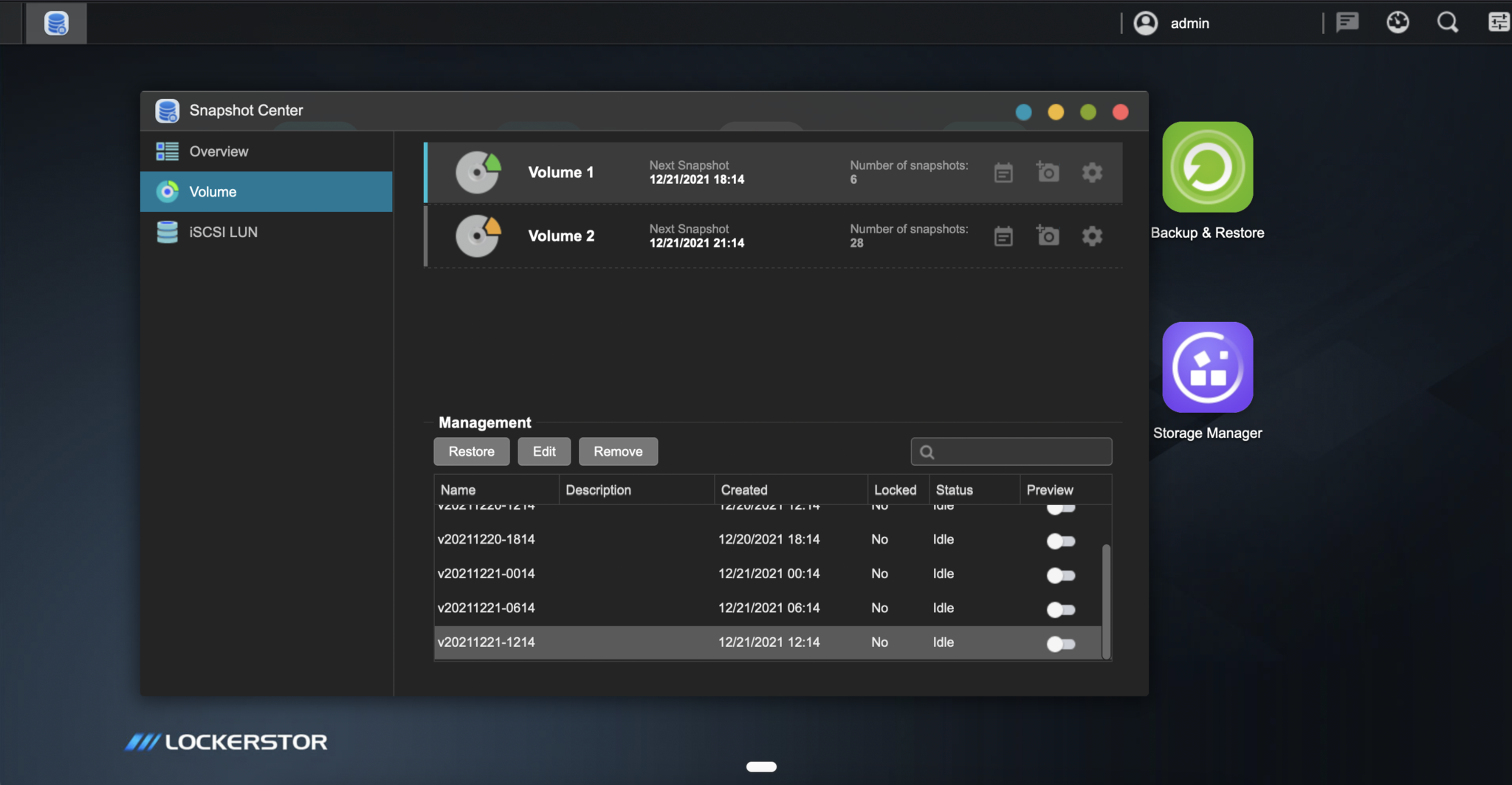

I had purchased a Duplicacy license ($20/yr for the first year, $5/yr afterwards) and had used Duplicacy before (I stopped using it in favor of trying Duplicati, which of course turned out to be a dead end). Setting it up was a breeze, and this time, instead of the flaky Backblaze backend, I decided to go with Amazon S3. S3 is pretty costly, but I was missing files when I restored from Backblaze, so I decided to go with what I considered the most reliable storage. Choosing S3 was also a boon for speed: I regularly back up at 50 MB/s, which means a full backup of my media takes less than 4 days. Plus, failures are handled more gracefully. Duplicati uses a local database to keep track of files, so if the database gets corrupted it has to be rebuilt from the backend, which isn’t possible if a full backup isn’t there already. Duplicacy, on the other hand, doesn’t have a database: it splits files into chunks and names each chunk after the hash of its content. All Duplicacy has to do is read some data and hash it; if that hash already exists in the backend, it can reference the existing chunk rather than reuploading it. This also greatly aids deduplication. More info can be found in the features section of the Duplicacy GitHub repo.
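The database-free, content-addressed approach can be sketched in a few lines of Python. This is a simplified model, not Duplicacy’s implementation: it assumes tiny fixed-size chunks, SHA-256 chunk names and an in-memory dict standing in for the S3 bucket, whereas Duplicacy itself uses variable-size chunks and its own storage layout. `CHUNK_SIZE`, `backup` and `restore` are hypothetical names.

```python
import hashlib

# Tiny fixed chunk size for illustration; Duplicacy uses variable-size chunks.
CHUNK_SIZE = 4


def backup(data: bytes, storage: dict[str, bytes],
           chunk_size: int = CHUNK_SIZE) -> tuple[list[str], int]:
    """Store `data` as content-addressed chunks.

    Returns the ordered list of chunk names (the "snapshot") and the number
    of chunks that actually had to be uploaded.
    """
    snapshot: list[str] = []
    uploaded = 0
    for i in range(0, len(data), chunk_size):
        chunk = data[i:i + chunk_size]
        name = hashlib.sha256(chunk).hexdigest()  # name derived from content
        if name not in storage:  # skip chunks the backend already has
            storage[name] = chunk
            uploaded += 1
        snapshot.append(name)
    return snapshot, uploaded


def restore(snapshot: list[str], storage: dict[str, bytes]) -> bytes:
    """Reassemble the original bytes from the snapshot's chunk names."""
    return b"".join(storage[name] for name in snapshot)


storage: dict[str, bytes] = {}
snap1, n1 = backup(b"aaaabbbbcccc", storage)
snap2, n2 = backup(b"aaaabbbbdddd", storage)  # only the last chunk changed
print(n1, n2, len(storage))  # 3 1 4
```

Because the storage itself is the index, an interrupted run loses nothing: the next run simply finds the already-uploaded chunks and skips them.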

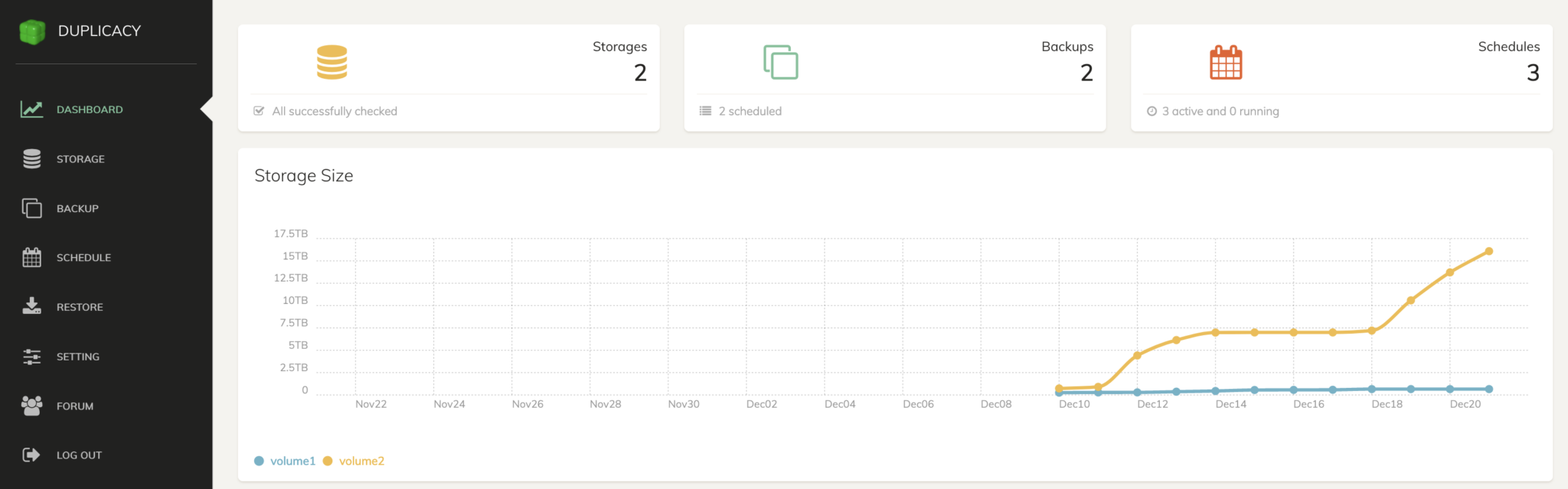

Restoring data from Duplicacy is easy to navigate, and it allows restoring individual files from a point in time, much like the snapshots the Asustor takes. It doesn’t preserve permissions, though, which is a bummer. It is also a little unintuitive: when you restore a file or folder, it recreates the file’s full path within the backup under the directory you select. For example, if a file was /volume1/media/big.buck.bunny.mp4 and you restored it to /volume1/media, it would end up at /volume1/media/media/big.buck.bunny.mp4.
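This restore behavior can be modeled as “take the file’s path relative to the backup root and append it to the restore directory.” The sketch below assumes the backup root is /volume1, which is what the example implies; `restored_location` is a hypothetical helper, not part of Duplicacy.

```python
from pathlib import PurePosixPath


def restored_location(original: str, backup_root: str,
                      restore_dir: str) -> PurePosixPath:
    """Where a restored file lands if the restore recreates its path,
    relative to the backup root, underneath the chosen restore directory."""
    relative = PurePosixPath(original).relative_to(backup_root)
    return PurePosixPath(restore_dir) / relative


print(restored_location("/volume1/media/big.buck.bunny.mp4",
                        "/volume1", "/volume1/media"))
# /volume1/media/media/big.buck.bunny.mp4
```

Under this model, selecting the backup root itself (here /volume1) as the restore directory would put the file back at its original location.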

The downside of my Duplicacy configuration is that it requires my k8s cluster to be working (as opposed to only requiring the NAS to be working). I’d love to say I have perfect uptime, but that isn’t the reality.

I also use healthchecks.io to monitor the backup jobs and send me an email if they fail or if a job doesn’t run for any reason.

Final Thoughts

I think I’ve finally found a sweet spot for backups, and I am slowly building confidence in my environment and my backups. There’s definitely room for improvement (and this configuration isn’t completely free), but it’s worth it if I can confidently say I could lose all of my local data and still restore from a point within the last 24 hours.