My Experience and Thoughts on Longhorn

Note: This post was originally posted on my personal blog. I have copied the content to this blog.

I touched on Longhorn in https://qtosw.com/2021/my-kubernetes-cluster-experience/ but it’s worth digging into further because the concept is really cool.

Longhorn works by having nodes with storage provide that storage as iSCSI devices or Kubernetes volumes. From what I can tell from research, it’s similar to CephFS in this way, but where it differs is that Longhorn operates purely at the block level, not at the filesystem level. CephFS presents a shared network filesystem (mounted with its own client, much as you would mount NFS), while Longhorn presents virtual block devices. As I mentioned in my previous post, this can complicate things: if an app with 100GB of storage assigned writes 100GB and then deletes 99GB, Longhorn still sees 100GB used. Longhorn doesn’t know about filesystems, so from the underlying storage’s perspective those blocks can’t be marked as free. Longhorn does know a little about filesystems when it comes to expanding volumes, but that has no effect on scheduling and allocating storage.
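To make the 100GB example concrete, here is a toy model of a filesystem sitting on a block-level volume. It is only a sketch of the accounting problem, not of how Longhorn is implemented; the `BlockVolume` and `ToyFilesystem` classes are made up for illustration, and each block stands for 1GB.

```python
class BlockVolume:
    """Toy block-level volume: it only ever learns about writes."""

    def __init__(self, size_gb: int) -> None:
        self.size_gb = size_gb
        self.written = set()  # indices of 1GB blocks that have ever been written

    def write(self, block: int) -> None:
        if not 0 <= block < self.size_gb:
            raise ValueError("block outside the volume")
        self.written.add(block)

    @property
    def used_gb(self) -> int:
        return len(self.written)


class ToyFilesystem:
    """Toy filesystem on top of the volume; deletes are pure bookkeeping."""

    def __init__(self, volume: BlockVolume) -> None:
        self.volume = volume
        self.free = list(range(volume.size_gb))
        self.files = {}  # file name -> list of block indices

    def create(self, name: str, size_gb: int) -> None:
        if size_gb > len(self.free):
            raise OSError("no space left on device")
        blocks = [self.free.pop(0) for _ in range(size_gb)]
        for block in blocks:
            self.volume.write(block)
        self.files[name] = blocks

    def delete(self, name: str) -> None:
        # Only the filesystem's own free list changes; nothing tells the volume.
        self.free.extend(self.files.pop(name))

    @property
    def used_gb(self) -> int:
        return sum(len(blocks) for blocks in self.files.values())


if __name__ == "__main__":
    volume = BlockVolume(100)
    fs = ToyFilesystem(volume)
    fs.create("big", 99)
    fs.create("small", 1)
    fs.delete("big")
    print(fs.used_gb, volume.used_gb)  # prints: 1 100
```

The filesystem reports 1GB in use, while the volume underneath still counts all 100 blocks as used, which is the view Longhorn has.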

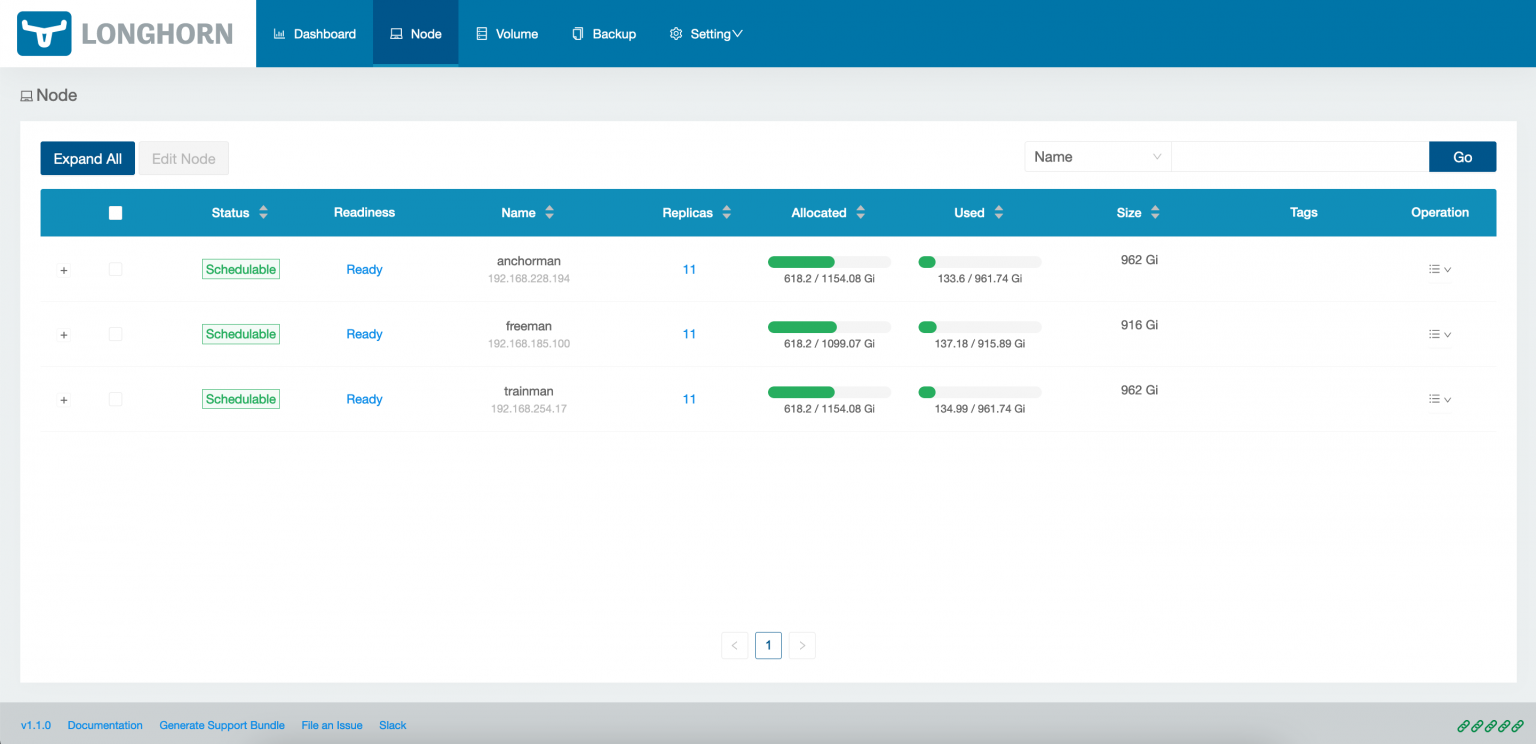

My three nodes with their 1TB of storage. Note that you can overprovision — by default Longhorn will let you overprovision by 200%.
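As a rough sketch of what 200% means in practice, the arithmetic below treats the percentage as a cap on the total size of replicas scheduled onto a disk. This is a simplified model under my own assumptions (the function names are made up, and Longhorn's real scheduler also considers things like minimum free space), so treat it as an illustration only.

```python
def schedulable_gb(disk_gb, reserved_gb=0.0, overprovision_pct=200.0):
    """Upper bound on the total size of replicas placed on one disk (simplified)."""
    return (disk_gb - reserved_gb) * overprovision_pct / 100.0


def can_schedule(new_replica_gb, already_scheduled_gb, disk_gb,
                 reserved_gb=0.0, overprovision_pct=200.0):
    """True if one more replica of the given size still fits under the cap."""
    limit = schedulable_gb(disk_gb, reserved_gb, overprovision_pct)
    return already_scheduled_gb + new_replica_gb <= limit


if __name__ == "__main__":
    # A 1TB disk at 200% allows up to 2000GB of replicas to be scheduled.
    print(schedulable_gb(1000))            # prints: 2000.0
    print(can_schedule(500, 1500, 1000))   # prints: True
    print(can_schedule(501, 1500, 1000))   # prints: False
```

The cap applies to the nominal volume sizes, not to the data actually written, so an overprovisioned disk can still fill up.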

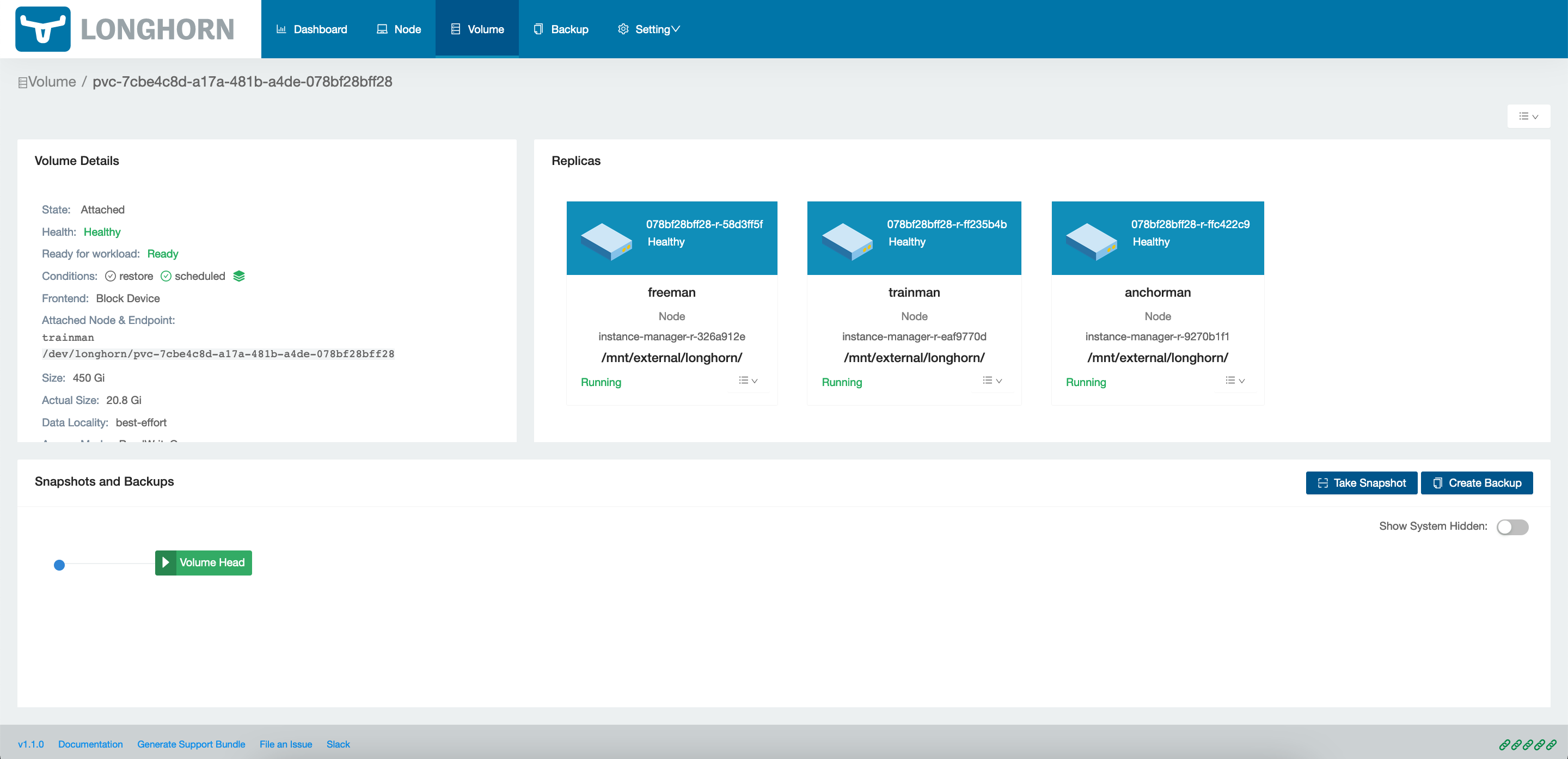

Ultimately, you designate nodes as Longhorn storage nodes. This can be done via labels and annotations, and you can configure default storage locations for the nodes so Longhorn can immediately start using them. By default, Longhorn will try to maintain 3 replicas of any given volume; because I only have 3 storage nodes, that means every node holds a replica of every volume.
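For the labels and annotations, here is a hedged sketch that builds a `kubectl patch` command for one node. The label key `node.longhorn.io/create-default-disk` and the annotation key `node.longhorn.io/default-disks-config` are what I recall from the Longhorn documentation, and as far as I know they only take effect when the "Create Default Disk on Labeled Nodes" setting is enabled; check the docs for your version. The node name and disk path are hypothetical.

```python
import json
import shlex


def default_disk_patch(path, allow_scheduling=True):
    """Merge-patch body that labels and annotates a node for Longhorn (assumed keys)."""
    disks = [{"path": path, "allowScheduling": allow_scheduling}]
    return {
        "metadata": {
            # Assumed label: tells Longhorn to read the disk config annotation.
            "labels": {"node.longhorn.io/create-default-disk": "config"},
            # Assumed annotation: a JSON-encoded list of disks, stored as a string.
            "annotations": {
                "node.longhorn.io/default-disks-config": json.dumps(disks)
            },
        }
    }


def patch_command(node, path):
    """argv for kubectl that applies the patch to the given node."""
    patch = json.dumps(default_disk_patch(path))
    return ["kubectl", "patch", "node", node, "--type", "merge", "-p", patch]


if __name__ == "__main__":
    # "node-1" and "/mnt/longhorn" are placeholder values.
    print(shlex.join(patch_command("node-1", "/mnt/longhorn")))
```

The script only prints the command, so it can be reviewed before anything touches the cluster.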

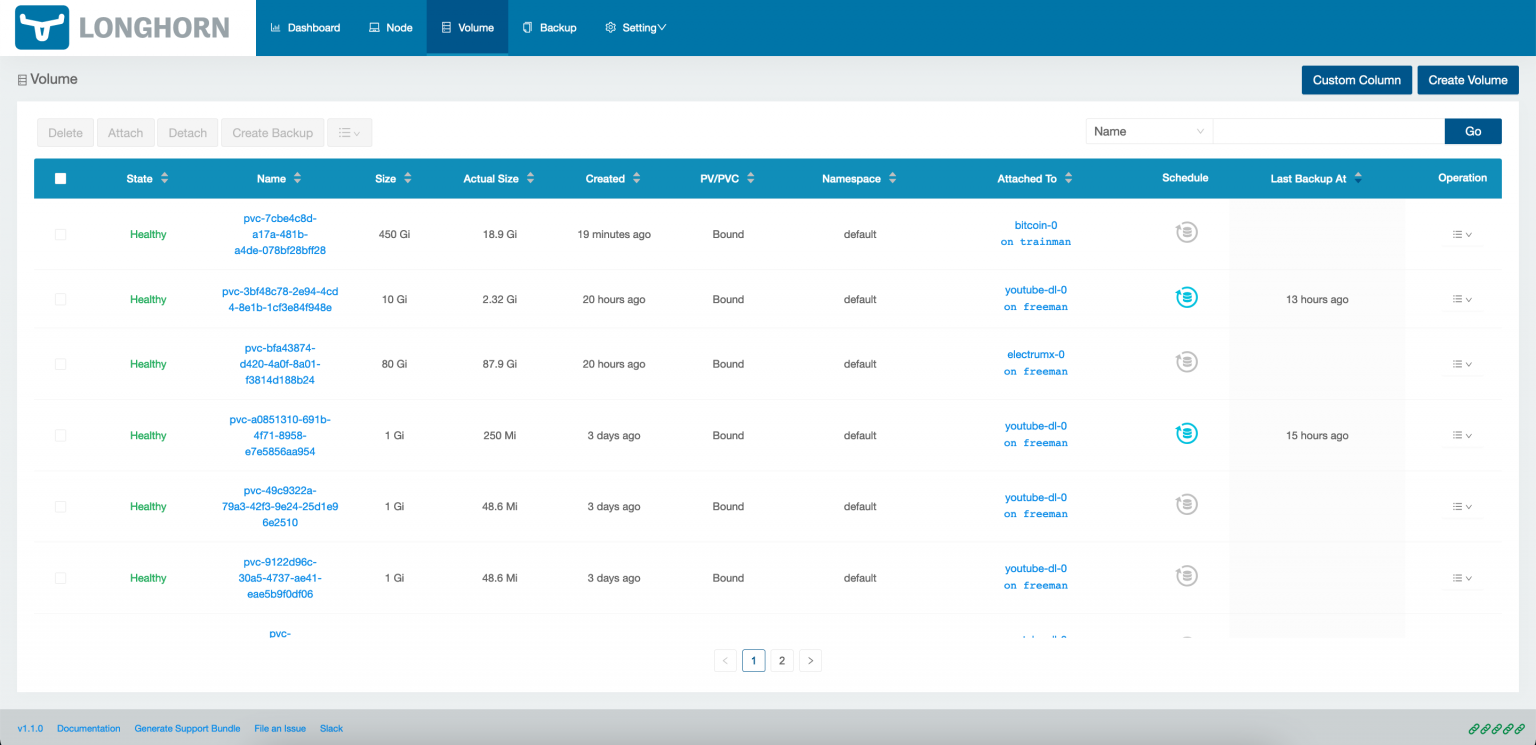

My Longhorn volumes

An example Longhorn volume (the Bitcoin blockchain, which I’m resynchronizing after recreating the volume to be 100GB smaller).

Behind the scenes, Longhorn actually manages each node from two pods, with names that have e and r in them. As far as I can tell, these are the instance-manager pods for the engine (e) and replica (r) processes. They are spawned by longhorn-manager and are a major part of having an operational Longhorn node.

According to Longhorn’s documentation:

Longhorn creates a dedicated storage controller for each volume and synchronously replicates the volume across multiple replicas stored on multiple nodes.

This means that volumes are isolated from each other in a way: if one volume’s storage controller fails, it should only affect that volume, not the others.

Longhorn has good documentation, and it reached General Availability in June 2020.

What I’ve learned from managing Longhorn is to keep in mind at all times that it works at the block device level. This matters when allocating storage but also when rebuilding: when Longhorn replicas go offline they have to rebuild from the latest version they have, and that might mean hours of rebuilding for multi-hundred-GB volumes, because even if the total change was to just one file, many blocks may have changed.
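To put a number on "hours", here is a back-of-the-envelope estimate. It assumes the worst case where the whole volume has to be copied, and the throughput figure is an input you have to supply yourself; the 500GB and 20MB/s values below are example inputs, not measurements of a rebuild.

```python
def rebuild_hours(volume_gb, throughput_mb_s):
    """Worst-case time to copy an entire volume at a steady throughput."""
    if throughput_mb_s <= 0:
        raise ValueError("throughput must be positive")
    seconds = volume_gb * 1000.0 / throughput_mb_s  # 1GB taken as 1000MB
    return seconds / 3600.0


if __name__ == "__main__":
    # Example inputs only: a 500GB volume copied at a sustained 20MB/s.
    print(round(rebuild_hours(500, 20), 2))  # prints: 6.94
```

A real rebuild may copy less than the full volume, so this is an upper bound under the stated assumption.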

Because every node holds a replica in my cluster, I don’t get to see much of this, but by default Longhorn doesn’t care too much about data locality, similar to clustered NFS. A volume can be attached on any node in the cluster, whether or not that node holds a replica, and a ReadWriteMany volume can be used from several nodes at once.

One downside to Longhorn is that it apparently has a pretty high write performance hit (probably why they recommend against using it with HDDs). For example, an rsync from a server with an NVMe drive over a gigabit connection to another server with a Longhorn volume only reaches 20MB/s, and I rarely see higher than that. I suspect this is because every write has to be replicated to the other replicas, and according to the documentation quoted above that replication is synchronous.
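Here is a toy model of why synchronous replication hurts writes: a write is only acknowledged once every replica has it, so the slowest replica sets the pace. The latency numbers are invented for illustration and the functions are my own names, so this is a sketch of the reasoning rather than a model of Longhorn's actual data path.

```python
def sync_write_latency_ms(replica_ack_ms):
    """A synchronous write finishes only when the slowest replica has acknowledged."""
    if not replica_ack_ms:
        raise ValueError("need at least one replica")
    return max(replica_ack_ms)


def serial_throughput_mb_s(write_kb, latency_ms):
    """Throughput if writes are issued one at a time, each waiting for its ack."""
    return (write_kb / 1000.0) / (latency_ms / 1000.0)


if __name__ == "__main__":
    # Invented figures: one local replica and two replicas over the network.
    acks = [0.2, 1.5, 4.0]
    latency = sync_write_latency_ms(acks)
    print(latency)  # prints: 4.0
    # 64KB writes, one in flight at a time, at that latency.
    print(round(serial_throughput_mb_s(64, latency), 1))  # prints: 16.0
```

With more writes in flight the throughput would be higher, but the per-write cost of waiting for the slowest replica stays the same.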

Longhorn has snapshot and backup functionality, which seems reliable enough. Again, block device, so block-level snapshots and backups. It supports NFS or S3 storage for backups, although it appears to support only NFSv4, not NFSv3.

Longhorn has proven to be a little fragile though. Longhorn assumes fast drives and constant connectivity and those aren’t guaranteed in my environment. When disconnected, Longhorn will try to recover and rebuild but it often doesn’t succeed automatically.

Longhorn does have the concept of DR clusters which I haven’t played with, but that seems like it would help address the fragility of the system without relying on the same infrastructure to restore from backup.

Expanding drives might be possible without downtime, but I’ve learned to just spin things down, expand the PVC via Kubernetes (which Longhorn detects and responds to automatically), and then spin my workload back up.
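The spin down, expand, spin up routine can be sketched as three `kubectl` commands. The script below only builds and prints them; the namespace, Deployment name, PVC name and size are hypothetical placeholders, and it assumes the workload is a Deployment and that the StorageClass allows volume expansion.

```python
import json
import shlex


def expansion_commands(namespace, deployment, pvc, new_size, replicas=1):
    """kubectl argv lists for: scale down, grow the PVC request, scale back up."""
    patch = json.dumps(
        {"spec": {"resources": {"requests": {"storage": new_size}}}}
    )
    kubectl = ["kubectl", "-n", namespace]
    return [
        kubectl + ["scale", f"deployment/{deployment}", "--replicas=0"],
        kubectl + ["patch", "pvc", pvc, "--type", "merge", "-p", patch],
        kubectl + ["scale", f"deployment/{deployment}", f"--replicas={replicas}"],
    ]


if __name__ == "__main__":
    # All names and the size here are placeholders for illustration.
    for cmd in expansion_commands("default", "bitcoind", "bitcoind-data", "200Gi"):
        print(shlex.join(cmd))
```

In practice I would wait for the pods to terminate and the volume to detach before running the patch step.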

Longhorn does not automatically recover from a node going offline though, at least not quickly enough that you can call it transparent. If a node goes offline, you need to scale your workload down and back up so the storage can be reattached to a different node. Maybe this will be improved in the future.

So, final thoughts? Longhorn fills a niche that I needed filled: the ability to move stateful workloads to any node at a moment’s notice (though not transparently). If stability and uptime are absolutely essential, maybe look into other, more mature technologies. I, however, am beginning to trust its stability, especially since I’m not doing maintenance on my physical servers as much now.