Trying Out Kairos

Currently, my Kubernetes cluster is running on Ubuntu 20.04, and my upgrade path to the latest LTS, 22.04, is dicey. So I figured it's time to look into immutable OSes. There are a few to pick from: Talos, K3OS, and Kairos are popular ones. Talos seems to be the most popular, and K3OS is used pretty often (though momentum in the project seems to have slowed). Oddly, not much has been written or discussed about Kairos, despite it being at least a couple of years old. I decided to try out Kairos with no commitment to switching my primary infrastructure over to it. I'll recount my experience chronologically; this isn't organized as a how-to.

The repository I used for this testing is here: https://github.com/tyzbit/kairos-config.

Why not Talos?

I anticipate this will be the first question, and it's one that should be asked. To be honest, I haven't done much rigorous research comparing Talos and Kairos; most of my experience with Talos comes from seeing people talk about issues with it on social media. But there are two main reasons why I wanted to use Kairos:

- No Longhorn support in Talos. This is actually being added right now in this issue, so if Kairos doesn't work out, maybe by the time I'm done testing I can fall back to Talos.

- Neither I nor some of the apps I run (like Home Assistant) are ready for such a pared-down OS. Home Assistant supports Bluetooth adapters, and I've had two plugged into different nodes in my cluster for a while, but it requires specific setup such as using dbus-broker. I anticipate this and other issues would cause me some frustration.

To summarize my view: Talos seems very powerful and improves an organization's security posture, but for a homelab, that much divergence from a typical OS seems like it would slow me down. I can just imagine doing something "the right way / the long way" and thinking "I could have done this in a one-liner on a system where I control the base".

And the most important bit

But wait!!! Kairos is immutable too!

Yes, but since Kairos is built on a mostly off-the-shelf distribution, I'll have first-rate package availability and better general compatibility with what I run. At least, that's my assessment.

So, how did it go?

TL;DR: Still working on it

Day 1

From the get-go, I wanted to use PXE. I've always wanted to use it but have never successfully gotten it working. Kairos has a tool called AuroraBoot that I wanted to try. I set it up on my Synology NAS as a regular Docker container, running alongside my Ubiquiti container. It took quite a while to figure out that

- The config for AuroraBoot itself is just the first argument

- The parameters CANNOT be set via environment variables, so they must be arguments or in a config file
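Because AuroraBoot only accepts its settings as arguments or from a config file, one workaround for a container UI that only exposes environment variables is a small wrapper that turns prefixed variables into --set key=value arguments. This is a minimal sketch under assumptions: the AURORABOOT_ prefix and the lowercasing of keys are a hypothetical convention of my own (not an AuroraBoot feature), and whether a config-file argument and --set flags combine this way should be checked against your AuroraBoot version.

```python
import os
import shlex
from typing import Mapping

# Hypothetical convention: AURORABOOT_<KEY>=<value> becomes "--set <key>=<value>".
PREFIX = "AURORABOOT_"


def env_to_set_args(env: Mapping[str, str], prefix: str = PREFIX) -> list[str]:
    """Translate prefixed environment variables into AuroraBoot --set arguments."""
    args: list[str] = []
    for name in sorted(env):  # sorted so the command line is reproducible
        if name.startswith(prefix) and len(name) > len(prefix):
            key = name[len(prefix):].lower()
            args += ["--set", f"{key}={env[name]}"]
    return args


def build_args(config_path: str, env: Mapping[str, str]) -> list[str]:
    """AuroraBoot's own config goes first, followed by any --set overrides."""
    return [config_path, *env_to_set_args(env)]


if __name__ == "__main__":
    # Print a shell-quoted argument list to append after the AuroraBoot image name.
    print(shlex.join(build_args("/storage/config.yaml", os.environ)))
```

With a hypothetical AURORABOOT_FLAVOR=ubuntu set, this would print /storage/config.yaml --set flavor=ubuntu; the path and key are placeholders.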

After I tried running it on my NAS, I ran into two issues. First, I'm running UniFi, and it's already using port 8080, so I can't run AuroraBoot on my NAS. I have a tiny thin client I can use for modest Docker workloads, but that led to my second issue: the AuroraBoot image and the OS image are over 1 GB, and I ran out of space. I decided to take a break for the day, but I'm considering using a thumb drive or something similar to store images.
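Before fighting over a port, it helps to check what's already listening. Here's a minimal sketch that reports whether anything accepts TCP connections on a port; 8080 is the port UniFi already had on my NAS. Run it on the NAS itself, or pass the NAS's IP as host from another machine, since a service bound only to a LAN IP won't answer on 127.0.0.1.

```python
import socket


def tcp_port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        try:
            # connect_ex returns 0 on success and an errno value on refusal
            return sock.connect_ex((host, port)) == 0
        except OSError:
            # timeouts and name-resolution failures are reported as "not in use"
            return False


if __name__ == "__main__":
    print("8080 is taken" if tcp_port_in_use(8080) else "8080 is free")
```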

Day 2

I continued to throw everything at the wall to see what stuck. Someone in the k8s@home Discord suggested adding an additional IP on the Synology, which wasn't possible, but I did connect another LAN port, which gave it another IP to bind to. Unfortunately, something on the NAS immediately grabbed port 8080 on the new IP, so it was still unusable.

I was able to confirm my router was sending the necessary TFTP info in the DHCP response, but AuroraBoot is supposed to respond as well using ProxyDHCP (and I could find no info on how OPNsense handles this, such as whether it allows or blocks it). I even tried running the binary directly on the thin client and on the host I'm using for testing, but I kept getting 403s from GitHub. I watched traffic for dropped packets and tried things local to the AuroraBoot box, but nothing worked. As an aside, the documentation for AuroraBoot is good, but the information in the repository is abysmal: there are a ton of config options and defaults that you can only find by reading the code.
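For context on what ProxyDHCP has to do: a PXE firmware client broadcasts a DHCPDISCOVER whose vendor class identifier (DHCP option 60) starts with PXEClient, and a ProxyDHCP server answers those with boot information only, leaving address assignment to the real DHCP server. Below is a minimal sketch of recognizing such a request from raw packet bytes, for example ones captured with tcpdump. It's illustrative only, not how AuroraBoot or pixiecore implement it, and it ignores repeated/concatenated options.

```python
BOOTP_HEADER_LEN = 236                   # fixed-size BOOTP fields before the options
MAGIC_COOKIE = bytes([99, 130, 83, 99])  # 0x63825363 marks the start of DHCP options
OPT_PAD, OPT_END = 0, 255
OPT_MESSAGE_TYPE = 53                    # value 1 == DHCPDISCOVER
OPT_VENDOR_CLASS = 60                    # e.g. b"PXEClient:Arch:00000:UNDI:002001"
DHCPDISCOVER = 1


def parse_dhcp_options(packet: bytes) -> dict[int, bytes]:
    """Return {option_code: value} for the options in a raw DHCP packet."""
    start = BOOTP_HEADER_LEN
    if packet[start:start + 4] != MAGIC_COOKIE:
        raise ValueError("missing DHCP magic cookie")
    options: dict[int, bytes] = {}
    i = start + 4
    while i < len(packet):
        code = packet[i]
        if code == OPT_PAD:  # single padding byte, no length field
            i += 1
            continue
        if code == OPT_END:
            break
        if i + 1 >= len(packet):
            raise ValueError("truncated option header")
        length = packet[i + 1]
        value = packet[i + 2:i + 2 + length]
        if len(value) != length:
            raise ValueError(f"truncated value for option {code}")
        options[code] = value
        i += 2 + length
    return options


def is_pxe_discover(packet: bytes) -> bool:
    """True if the packet is a DHCPDISCOVER sent by a PXE client."""
    opts = parse_dhcp_options(packet)
    return (opts.get(OPT_MESSAGE_TYPE) == bytes([DHCPDISCOVER])
            and opts.get(OPT_VENDOR_CLASS, b"").startswith(b"PXEClient"))
```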

Reading this in the documentation was also a slap in the face:

Generic hardware based netbooting is out of scope for this document.

So right now the problem seems to be that AuroraBoot is supposed to respond automatically to DHCP requests and "hook" the booting system into talking to it, but it's not doing that. I tried everything I could think of to take Docker out of the equation and to troubleshoot why it wasn't listening on port 67, but I couldn't find anything. My plan tomorrow is to keep troubleshooting and, if all else fails, maybe just make a bootable thumb drive. At that point, though, I'll consider jumping ship to Talos, since I was unable to get a core feature meant to make things easier for me working after hours of fiddling.
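One quick way to see whether anything on a host holds UDP port 67 is to try binding it yourself: if the bind fails with "address in use", something (hopefully AuroraBoot) has the port. A minimal sketch, assuming it runs directly on the host; binding ports below 1024 needs root, and a container that doesn't use host networking may not hold the host's port at all.

```python
import errno
import socket


def udp_port_available(port: int, host: str = "0.0.0.0") -> bool:
    """Try to bind a UDP socket; False means another socket already holds the port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        try:
            sock.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return False
            raise  # e.g. EACCES: ports below 1024 need root
    return True


if __name__ == "__main__":
    try:
        held = not udp_port_available(67)
        print("udp/67 is bound by something" if held else "udp/67 is free: nothing is listening")
    except PermissionError:
        print("binding udp/67 needs root; rerun with sudo")
```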

Day 3

I found this support article about how to enable PXE boot, so I made the changes it described, and now I'm getting the older Intel PXE boot screen, which I think is an improvement. If anything works, it'll be the legacy stuff.

Day 4

Now that I could boot from the network, I tried the NAS again and it just worked! So I no longer need the thin client that's short on space.

Iterating on my config took a while for a few reasons:

- Documentation wasn't great

- The config file was on my NAS, and I needed to restart the container to pick up a new config, which takes 5 minutes (pulling directly from GitHub would let it use the cache, but I get 403s and I don't want to troubleshoot that part anymore)

- Changing container parameters on my NAS required deleting and recreating the container

- The web validator can't tell you if a value is wrong, only whether it's formatted correctly as cloud-config

- A config given through the web UI that the node serves seems to be overridden by the config from the PXE boot, so if it doesn't work, you have to reboot
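Since a syntax validator can't catch a well-formed but wrong key, a tiny semantic check of my own helps. This is a minimal sketch that runs on an already-parsed config (load the YAML with whatever library you like); the whitelist is hypothetical and deliberately incomplete, not the Kairos schema. It flags unknown keys in each users entry and suggests the closest known one, e.g. password where the user entry expects passwd.

```python
import difflib
from typing import Any

# Hypothetical, hand-maintained whitelist: NOT the full Kairos schema.
KNOWN_USER_KEYS = {"name", "passwd", "groups", "ssh_authorized_keys"}


def lint_users(config: dict[str, Any]) -> list[str]:
    """Flag unknown keys in each users[] entry, suggesting the closest known key."""
    problems: list[str] = []
    for index, user in enumerate(config.get("users", [])):
        for key in user:
            if key in KNOWN_USER_KEYS:
                continue
            hint = difflib.get_close_matches(key, sorted(KNOWN_USER_KEYS), n=1)
            suggestion = f" (did you mean '{hint[0]}'?)" if hint else ""
            problems.append(f"users[{index}]: unknown key '{key}'{suggestion}")
    return problems
```

The same pattern extends to other sections: keep a set of keys you've confirmed work and fail loudly on anything else.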

Nevertheless I iterated through configs. My first goal was a single-node cluster with k3s and calico instead of flannel. Getting BGP to work with my router would be nice, but not something I'd troubleshoot until the very end

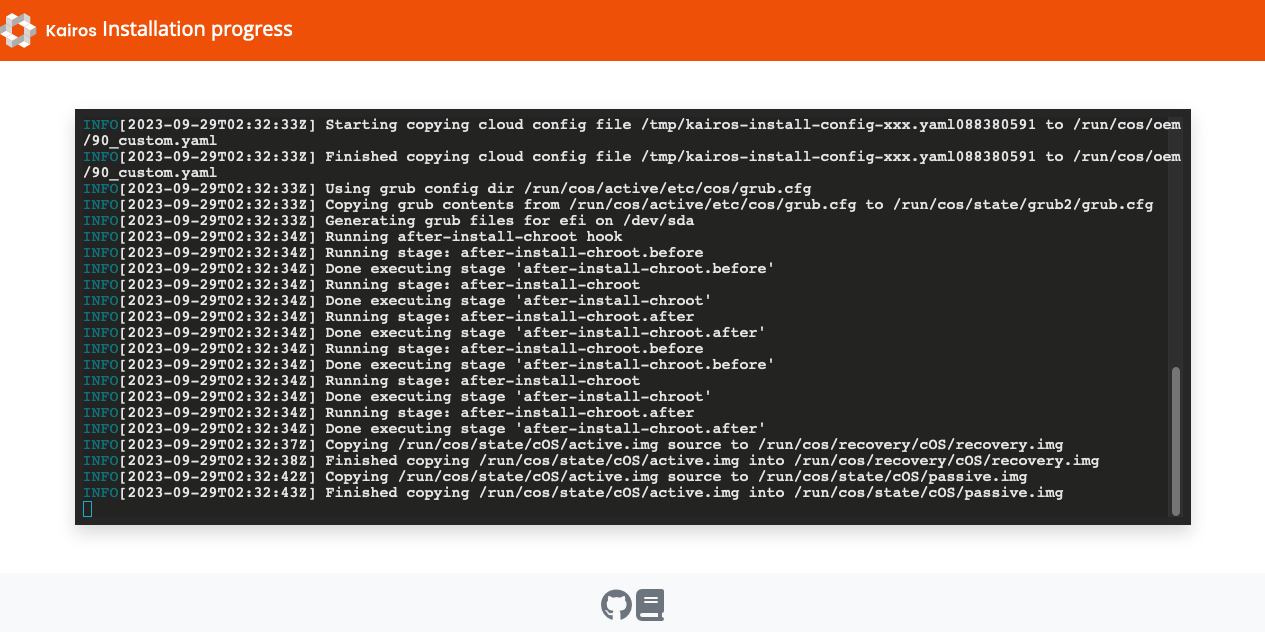

My first install, done manually (this is the web UI that the live boot OS serves on port 8080)

I finally found a config that auto-installs! After reinstalling a few times, I got a working cluster!

So this has proven out a single-node cluster. Now to test various things from my main cluster to ensure they work on Kairos. Actually I wanna test multi-cluster stuff real quick, then reinstall as single-node. It was about this time that I realized it was 0145 and I called it for the day. Just kidding, I was up until 0230.

Timings in my environment:

- (Re)start AuroraBoot on the NAS: 5-6 minutes (pulling from quay.io; if you use the release stuff, it only takes a minute or two)

- Install Kairos: 4 minutes

- Boot Kairos the first time: 3 minutes

Also it looks like Kairos reaches back out to AuroraBoot/pixiecore while running, so you may not be able to stop those services.

Day 5

I spent the first part of today troubleshooting automatically mounting a device and updating /etc/default/console-setup. Then I had some trouble with it pulling down my GitHub key to add to authorized_keys, so I manually specified one. That didn't work, so I had to cut out options to get back to a config that installed correctly and then slowly re-add options until I found the culprit. I finally put the server on a Wi-Fi plug, though, so now I don't have to get up to reboot it when I can't log in. I found out that even when the config installed, the password I chose didn't work to log in (though I confirmed GitHub keys were working), so I tried a stronger one. Then I realized that when I removed and re-added the field, I had changed it from passwd to password. Heh.
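When key import misbehaves, it helps to check what GitHub actually serves for the account. GitHub publishes each user's public SSH keys as plain text at https://github.com/<username>.keys; this minimal sketch fetches and lists them so you can compare against ~/.ssh/authorized_keys on the node. Using tyzbit (the owner of my config repo) as the username is just an example.

```python
import urllib.request


def github_keys_url(username: str) -> str:
    """GitHub publishes each user's public SSH keys as plain text at this address."""
    return f"https://github.com/{username}.keys"


def parse_keys(body: str) -> list[str]:
    """One key per line; drop blank lines and surrounding whitespace."""
    return [line.strip() for line in body.splitlines() if line.strip()]


def fetch_github_keys(username: str, timeout: float = 10.0) -> list[str]:
    """Download and parse the published keys (needs network access)."""
    with urllib.request.urlopen(github_keys_url(username), timeout=timeout) as resp:
        return parse_keys(resp.read().decode("utf-8"))
```

For example, fetch_github_keys("tyzbit") returns whatever keys are currently on that account; an empty list would explain a login failure on its own.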

After I got a single node booting reliably, with all the initial stuff I wanted working, I started testing out k3s. I had never used it before, but it doesn't seem to complicate things much. I bootstrapped the cluster with Flux, which was a cinch, and threw some manifests from my other cluster in there to see how they deploy. All of them worked great the first time (cert-manager, nginx ingress, and a simple nginx app to test). I even got BGP working (although the IP pool on the cluster is wrong; I gotta fix that). At that point it was pretty late, so I stopped there. I have plans this weekend, so I don't know if I'll be able to pick this back up, but it's looking very promising. I'm looking forward to pulling another node and trying to get a multi-node automatic p2p cluster going.

Day 6

I won't have as much time to dedicate to this today, so I'm prioritizing. First, I want to automatically reformat the NVMe stick; then I want to test whether I can have the cluster bootstrap itself with Flux. One challenge seems to be the GitHub token: using --set with AuroraBoot doesn't seem to let you set values that aren't part of the existing Config struct. I might open a PR against the project to add a custom dictionary specifically for this.

I started recording the screen, because that appears to be the only way to see the output of the install. I was able to set up my user as a sudoer and to run kubectl commands natively. I think I got NVMe formatting right: if the disk is blank, it formats it; otherwise, it leaves it alone.
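The "format only if blank" rule can be expressed as a standalone check. This is a minimal sketch of the decision logic, not the yip stage from my config: it relies on blkid -p exiting with status 0 when it finds a signature and 2 when it identifies nothing. Because blkid also returns 2 when it can't read the device at all, the sketch refuses to call a missing or unreadable device "blank". The actual formatting command is deliberately left out, and any device path (e.g. /dev/nvme0n1) is a placeholder.

```python
import os
import subprocess


def should_format(blkid_returncode: int) -> bool:
    """Decide from a `blkid -p` exit status whether the disk counts as blank."""
    if blkid_returncode == 0:  # a filesystem or partition-table signature was found
        return False
    if blkid_returncode == 2:  # nothing could be identified on the device
        return True
    # Any other status (usage error, ambivalent probe, ...) is not safe to act on.
    raise RuntimeError(f"unexpected blkid exit status {blkid_returncode}")


def disk_is_blank(device: str) -> bool:
    """Probe the device with blkid, guarding against cases that also yield status 2."""
    if not os.path.exists(device):
        raise FileNotFoundError(device)
    if not os.access(device, os.R_OK):
        raise PermissionError(f"cannot read {device}; run as root")
    result = subprocess.run(["blkid", "-p", device], capture_output=True, text=True, check=False)
    return should_format(result.returncode)
```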

Day 7

I tried adding functionality for custom values in AuroraBoot, but I found out the templating doesn't happen in AuroraBoot; it happens in yip when it runs its custom cloud-init. So I'll need to find a way to get the values there, or, maybe more simply, add that functionality to AuroraBoot.
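Until custom values are supported upstream, one workaround is to render placeholders into the config before AuroraBoot ever serves it. A minimal sketch using Python's string.Template; it runs outside both AuroraBoot and yip, so it's a stand-in for the feature, not how either tool templates. The ${github_token} placeholder name is hypothetical. Literal dollar signs in the config (for example in shell commands) must be written as $$, and substitute raises KeyError for any placeholder without a value, which beats silently serving a half-filled config.

```python
import os
from string import Template
from typing import Mapping


def render_config(template_text: str, values: Mapping[str, str]) -> str:
    """Fill ${name} placeholders; raises KeyError if a placeholder has no value."""
    return Template(template_text).substitute(values)


def render_file(src: str, dst: str, values: Mapping[str, str]) -> None:
    """Render src into dst; a newly created dst gets owner-only permissions."""
    with open(src, encoding="utf-8") as fh:
        rendered = render_config(fh.read(), values)
    # 0o600 only applies when dst is created; an existing dst keeps its mode.
    fd = os.open(dst, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w", encoding="utf-8") as out:
        out.write(rendered)
```

Usage would look like render_file("config.tmpl.yaml", "/storage/config.yaml", {"github_token": os.environ["GITHUB_TOKEN"]}), where the file names and variable name are placeholders.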

I tried multi-node booting. Iterating is kinda hard because, as far as I know, the logs aren't saved anywhere. The official troubleshooting guide suggests enabling a verbose debug mode but doesn't say explicitly how to persist the logs so they can be reviewed. It does mention disabling immutability; maybe that would be enough.

```
Oct 02 04:52:47 hyades kairos-agent[1850]: {"level":"info","ts":1696222367.9297855,"caller":"service/node.go:357","msg":"not enough nodes available, sleeping... needed: 2, available: 0"}
```

This is a weirdly exciting log line to see. I found out network_token can't be an arbitrary string: it's tied into EdgeVPN (and can be generated with docker run -ti --rm quay.io/mudler/edgevpn -b -g), so I decided to keep all of that enabled and see what happens.
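Since the agent logs structured JSON after the journald prefix, progress while nodes join can be pulled out mechanically. A minimal sketch that parses a line like the one above, converts the ts epoch to UTC, and extracts the needed/available counts; the regex is based only on this one message, so other messages may be worded differently. Lines could come from journalctl -t kairos-agent, which filters by the kairos-agent syslog identifier shown in the line.

```python
import json
import re
from datetime import datetime, timezone

NODES_RE = re.compile(r"needed: (\d+), available: (\d+)")


def parse_agent_line(line: str) -> dict:
    """Extract the JSON payload that kairos-agent writes after the journald prefix."""
    payload = json.loads(line[line.index("{"):])
    result = {
        "level": payload["level"],
        "time": datetime.fromtimestamp(payload["ts"], tz=timezone.utc),
        "msg": payload["msg"],
    }
    match = NODES_RE.search(payload["msg"])
    if match:  # only the "not enough nodes" style message carries these counts
        result["needed"], result["available"] = map(int, match.groups())
    return result
```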

Day 8

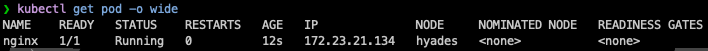

```
tyzbit@localhost:~$ kubectl get nodes
NAME                 STATUS   ROLES                  AGE   VERSION
localhost-a96750bc   Ready    <none>                 12s   v1.27.3+k3s1
localhost            Ready    control-plane,master   62s   v1.27.3+k3s1
```

😌

I ran a little webserver on my NAS to host sensitive files and now I can bootstrap my cluster with SOPS secrets with Flux.
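A webserver for this doesn't need much. Here's a minimal sketch using Python's http.server, not necessarily what I run: it serves one directory, disables directory listings so a client has to know the exact file name, and optionally only answers an allowlist of client IPs. It's plain HTTP with no authentication, so it only makes sense on a trusted LAN; the directory and IPs you'd pass in are placeholders.

```python
import functools
import http.server
from typing import Iterable


class PrivateFileHandler(http.server.SimpleHTTPRequestHandler):
    """Serve files from one directory, with no directory listings."""

    allowed_clients: frozenset = frozenset()  # empty means any client may connect

    def _client_allowed(self) -> bool:
        return not self.allowed_clients or self.client_address[0] in self.allowed_clients

    def do_GET(self) -> None:
        if not self._client_allowed():
            self.send_error(403, "client not allowed")
            return
        super().do_GET()

    def do_HEAD(self) -> None:
        if not self._client_allowed():
            self.send_error(403, "client not allowed")
            return
        super().do_HEAD()

    def list_directory(self, path):
        # Never reveal which files exist; the client has to know the exact name.
        self.send_error(404, "directory listing disabled")
        return None


def make_server(directory: str, host: str = "0.0.0.0", port: int = 8000,
                allowed: Iterable[str] = ()) -> http.server.ThreadingHTTPServer:
    """Build (but don't start) a threaded server for `directory`."""
    handler_cls = type("ConfiguredHandler", (PrivateFileHandler,),
                       {"allowed_clients": frozenset(allowed)})
    handler = functools.partial(handler_cls, directory=directory)
    return http.server.ThreadingHTTPServer((host, port), handler)
```

Starting it is make_server("/path/to/secrets", allowed=["192.168.1.10"]).serve_forever(), with your own path and node IPs.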

A lot is working, such as the p2p stuff, but it bootstrapped two control plane nodes, which, now that I think about it, might be because I enabled HA. Another thing to test.

Working:

- Specifying the p2p token elsewhere so I can commit configs to a public repo

- decentralized p2p stuff, generally

- User stuff

- Auto install to the right disk

- Growing partitions

- Disabling kubevip

- All k3s options

- kubectl out of the box

As a treat, here's a video of a host being bootstrapped:

Day 9

I worked on the Longhorn mounting; it still eludes me.

I figured out how to get AuroraBoot to serve other files:

```yaml
netboot:
  cmdline: >-
    rd.live.overlay.overlayfs
    rd.neednet=1
    ip=dhcp
    rd.cos.disable
    netboot
    nodepair.enable
    console=tty1
    console=ttyS0
    console=tty0
    extra-config={{ ID "/storage/config_extra.yaml" }}
```

Every option you add later will become other-X:

```
❯ curl "http://192.168.1.59:8090/_/file?name=other-2"
#cloud-config
p2p:
  network_token: ...
```

The bad part is that those command-line options become boot arguments, which keep Kairos from booting.
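Since the extra files are only addressable by their generated other-X names, a small helper can build those URLs and scan for the ones holding cloud-config. A sketch, assuming the /_/file?name=other-N pattern I observed holds (it's pixiecore's internal naming, not a documented interface) and that the server answers at the address from my curl, 192.168.1.59:8090. Scanning from 0 is a guess, since I don't know where the numbering starts.

```python
import urllib.error
import urllib.parse
import urllib.request


def extra_file_url(base_url: str, index: int) -> str:
    """Build the URL for the extra file published as other-<index>."""
    query = urllib.parse.urlencode({"name": f"other-{index}"})
    return f"{base_url.rstrip('/')}/_/file?{query}"


def find_cloud_configs(base_url: str, max_index: int = 10, timeout: float = 5.0) -> dict[int, str]:
    """Fetch other-0..other-<max_index> and keep the ones that look like cloud-config."""
    found: dict[int, str] = {}
    for index in range(max_index + 1):
        try:
            with urllib.request.urlopen(extra_file_url(base_url, index), timeout=timeout) as resp:
                text = resp.read().decode("utf-8", errors="replace")
        except urllib.error.HTTPError:
            continue  # nothing served under this name (or a server error); try the next
        if text.startswith("#cloud-config"):
            found[index] = text
    return found
```

Connection errors (as opposed to HTTP errors) are left to propagate, so an unreachable server fails loudly instead of looking like an empty result.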

Day 10

Today is (or should be) Flux day! I made my first attempt at a Flux bundle and after solving some DNS issues (caused by the EdgeVPN DNS server) I tried it out. I spent a lot of time on the bundle and then more time on fixing cluster connectivity issues.

Day 11

I got the Flux bundle working, but it took a bit of iterating to get it to try bootstrapping automatically. Now it truly is hands-off, at least as much as possible. I plan on recording a video once I get my capture card working again. Here's the config I committed today.

Here's my current TODO list:

- add templating for all values in config

  - https://github.com/kairos-io/AuroraBoot/blob/109bdaaeec2327bf2439eb9656ce85c180ffeaa1/pkg/schema/config.go#L7

  - Need to add template replacement in AuroraBoot or add it to upstream yip

- plan out IPs for all nodes

- figure out how to avoid needing the built-in LAN / having 2 LAN adapters active:

  - if it's necessary, set up a second switch specifically for it to keep 10G ports free

- test upgrades

- trick AuroraBoot into serving other files

  - https://github.com/danderson/netboot/blob/64f6de6d0e3bf095f40a1b572a068dfecb7ba29a/pixiecore/http.go#L128

Day 12

I won't have a lot of time to dedicate today, but I did make a tweak to the Flux bundle.

Day 13

That's it for this post! I've got a cluster that provisions itself with no intervention beyond choosing boot options, and it sets up everything from External-DNS to cert-manager to even Longhorn. Look out for my next post with a how-to!