unRAID and How I Do Things

Note: This post was originally posted on my personal blog. I have copied the content to this blog.

I recently started using unRAID for my server at home, and I figured I’d do a rough write-up of how I configured mine and some design decisions I made along the way.

What is unRAID?

For the uninitiated, unRAID is “a scalable, consumer-oriented, server operating system. Traditional approaches to personal computing technology place limits on your hardware’s capabilities, forcing you to choose between a desktop, media player, or a server. With unRAID, we can deliver all of these capabilities, at the same time, and on the same system.” What that means is unRAID is a platform from which you can store and serve files, and run applications to manage and stream those files for you (such as Plex or Kodi) using Docker or a fully virtualized machine with whatever specific applications you want installed.

How did I build my network?

One of the perks of moving to unRAID on a new server (as opposed to running a physical server with all of the applications I needed installed in an ad-hoc manner) is that the previous server was freed up to do other things. Since my old server had two Ethernet ports and was still more than capable, I converted it to run OPNsense, an open-source network and security appliance forked from pfSense. The network is pretty unexceptional from there, although I do connect to my VPN via OPNsense and route traffic to it, so any host on the main fabric can natively reach VPN resources.

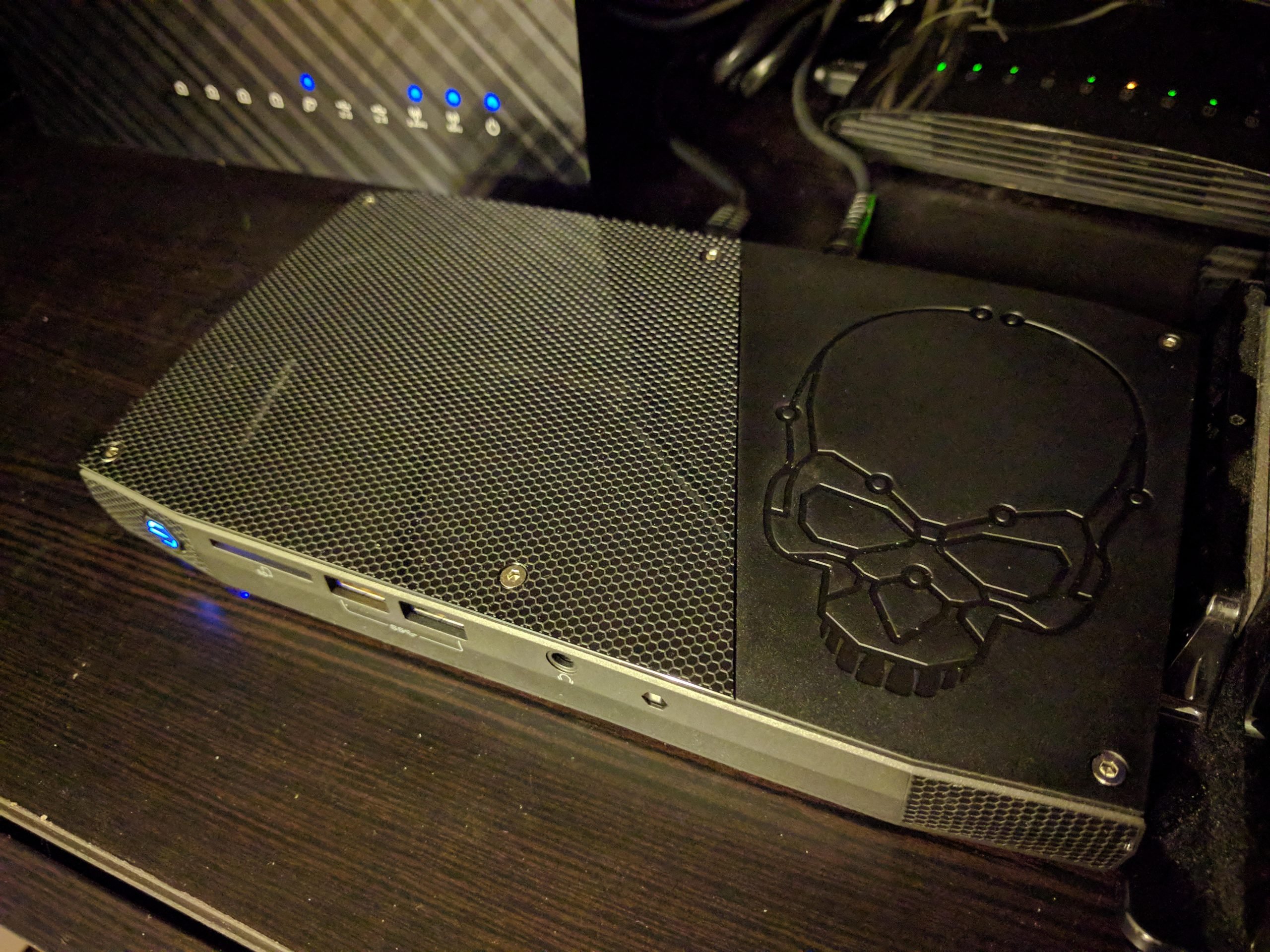

How did I build my system?

My system build is pretty simple, with some basic goals and ideas:

- All things equal, I want the lowest power consumption possible

- Again, all things equal, I want the lowest footprint possible

- The system must be capable of transcoding 4 streams at once

- The system must have enough memory for at least two medium-sized VMs and about 10 Docker containers

- The system must have enough storage to accommodate running the VMs and Docker containers (but it does not need to store all of my files, since I already have a NAS)

I went with a Skull Canyon NUC with 32GB of DDR4 RAM and two 1TB M.2 SSDs. I also used an off-the-shelf 32GB USB flash drive for unRAID’s OS (which is overkill, since it should really never use more than 1GB). The system stores no media files, only application configurations, ISOs for VMs, Docker volumes, etc. Once fully spun up, I’m at 25% RAM used and 33% storage used, which should leave me room to grow and an adequate amount of storage for logs. Since the system is quad-core with Hyper-Threading, it should have enough processing power to run all VMs and Docker containers and burst transcode 4 streams with a little headroom. And since I’m using DDR4 and M.2, the system’s bottleneck will probably always be CPU, as access times for application-related storage and RAM should be very low. The bottleneck to the NAS is more than acceptable, since a user will generally only need one file at a time, and the difference between waiting 5ms and 70ms for a single file to start streaming is imperceptible to the end user. Batch operations are infrequent and can happen in the background.

What do I run on my system?

I run Plex, ownCloud, Nginx and a number of supporting applications, nearly all containerized. Nginx serves as a convenient reverse proxy to add SSL support to all backend web-serving containers and to add authentication for every service. In addition, I have a few different monitoring/performance containers such as netdata, Zabbix and cAdvisor. Configuration volumes are mounted into the containers from the unRAID shares, and storage mounts are passed to the containers as volumes using the “Unassigned Devices” unRAID plugin. I also run Splunk on a VM.

How do I monitor my system?

This is where most of the actual work took place in the migration, since my previous designs assumed a lot about the system providing critical services (such as the ability for Zabbix to see all processes for example, which is not the case for a Zabbix Docker container). I did some re-thinking about what my monitoring needed to look like and decided I really only needed to know:

- When services were unavailable

- When the system itself was unavailable

- When the system itself was misbehaving (high CPU, low/no RAM etc)

Since not all services provide an externally-facing HTTP endpoint (such as my custom plex-status container), I needed to be able to either monitor log output and alert on that, or monitor process listings (which Zabbix, as configured in Docker, cannot do natively). What I ended up configuring was HTTP endpoint checks from the Zabbix server to each service (over my VPN), and for the services that don’t expose HTTP endpoints, I hit the cAdvisor API (again over VPN) and search for the process in the process listing (since cAdvisor mounts the system’s /proc inside the container, it sees all processes). I’ve also begun using netdata for performance alerting, which duplicates some features of Zabbix but alerts directly to Discord instead of my default Zabbix action, which is email.
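The two kinds of checks described above can be sketched in Python. This is only an illustration, not my actual Zabbix configuration: the function names are made up, and the cAdvisor endpoint path (`/api/v2.0/ps/`) and the `cmd` field in its response are assumptions that should be verified against the cAdvisor version in use.

```python
import json
import urllib.error
import urllib.request


def http_service_up(url, timeout=5.0):
    """Return True if the URL answers with a non-error HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Covers refused connections, DNS failures, timeouts and 4xx/5xx.
        return False


def fetch_process_list(cadvisor_base_url, timeout=5.0):
    """Fetch the host process listing from cAdvisor.

    The endpoint path is an assumption; check your cAdvisor API docs.
    """
    url = cadvisor_base_url.rstrip("/") + "/api/v2.0/ps/"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def process_running(ps_entries, needle):
    """Return True if any entry's command line contains `needle`.

    Each entry is assumed to be a dict with a "cmd" key.
    """
    return any(needle in entry.get("cmd", "") for entry in ps_entries)
```

A service without an HTTP endpoint would then be checked with something like `process_running(fetch_process_list("http://cadvisor-host:8080"), "plex-status")`, where the host name and port are placeholders.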

How do I handle logs?

Normally, unRAID catches Docker logs and exposes them in the web UI, where you can watch them in real time. This is great for debugging containers, but once you put a design in place, how do you read logs long-term? My solution was to use Splunk, as Docker has a built-in logging driver that sends logs to Splunk. In the “Extra Parameters” field for each of my containers, I add:

--log-driver=splunk --log-opt splunk-token=xxx --log-opt splunk-url=http://xxx:8088 --log-opt tag="{{.Name}};{{.FullID}};{{.ImageName}};{{.ImageFullID}}"

This ships the logs off to Splunk, where I can ingest them and search on them.
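Because the tag above packs four values into one semicolon-separated string, anything consuming the events outside of Splunk has to split it back apart. A minimal sketch of that, assuming the tag format shown above; the `ContainerTag` and `parse_tag` names and the sample values are hypothetical:

```python
from typing import NamedTuple


class ContainerTag(NamedTuple):
    """Fields in the order used by the tag template above."""
    name: str
    full_id: str
    image_name: str
    image_full_id: str


def parse_tag(tag):
    """Split a 'name;full_id;image_name;image_full_id' tag into fields."""
    parts = tag.split(";")
    if len(parts) != 4:
        raise ValueError(f"expected 4 ';'-separated fields, got {len(parts)}")
    return ContainerTag(*parts)


# Made-up example values, only to show the shape of the result.
example = parse_tag("plex;abc123;plexinc/pms-docker;sha256:def456")
print(example.name, example.image_name)
```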

What challenges did I face and what pitfalls did I have to address/still have?

First off, the biggest challenge to the migration was simply time; since my previous server had gradually grown in responsibilities and apps running on it, each migration of those responsibilities took time, even if it was (best-case scenario) just 15-30 minutes to spin up a container and restore configs. I also spent a lot of time struggling with containerized Splunk (and eventually decided to run it on my VM), giving the Zabbix container access to the underlying system even though it was unaware it was running in a container (which I opted not to do in favor of the cAdvisor approach above), and other ultimately unfruitful paths/ideas.

I also have some deficiencies in design that I have to be cognizant of, or find a way to address in the future. For example, the Docker containers ship their logs off to Splunk, but Splunk itself is running in a VM; what happens when the containers come up before Splunk? I lose the logs. What happens when the containers can’t reach Splunk? Well, if I’m using a DNS name (which I am) and the name doesn’t properly resolve, it will prevent the containers from starting. Otherwise, if it gets an IP but it’s wrong or out of date, I also lose the logs.
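The two failure modes above (the name not resolving versus the address being unreachable) can at least be told apart before starting containers. The following is a rough sketch of such a pre-flight check, not something I currently run; the function name is made up, and a successful TCP connection only shows that something is listening on the port, not that it is Splunk.

```python
import socket


def check_log_endpoint(host, port, timeout=3.0):
    """Classify the endpoint as 'ok', 'dns_failure', or 'unreachable'."""
    try:
        addrinfo = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror:
        # The name did not resolve at all.
        return "dns_failure"
    for family, socktype, proto, _canonname, sockaddr in addrinfo:
        try:
            with socket.socket(family, socktype, proto) as sock:
                sock.settimeout(timeout)
                sock.connect(sockaddr)
                return "ok"
        except OSError:
            # Refused or timed out; try the next resolved address.
            continue
    return "unreachable"
```

Calling `check_log_endpoint("splunk.example.lan", 8088)` (a placeholder host name) from a startup script would show which case applies before any container is launched.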

Speaking of logs, many containers default to a logging level that is more verbose than expected. For example, cAdvisor logs a performance event for each container (and VM) every second. When you have 15, that means 15 events per second, which (if you use the free 500MB/day Splunk, like I do) will push you over your daily logging limit very quickly. That was an easy fix, though: it just required adding --housekeeping_interval=15s to the launch options of the container. On that note, adding container options (as opposed to Docker options) requires adding them to the end of the “Repository” field in unRAID, since the “Extra Parameters” field is for extra Docker parameters, not parameters to pass to the container.
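To put rough numbers on that, here is a small back-of-the-envelope calculation. The 15 sources, the 1s and 15s intervals, and the 500MB/day limit come from the text above; the average event size of 400 bytes is purely an assumption for illustration, so the byte totals are indicative only.

```python
SECONDS_PER_DAY = 24 * 60 * 60
DAILY_LIMIT_BYTES = 500 * 1000 * 1000  # 500MB/day, treated as decimal MB
ASSUMED_EVENT_BYTES = 400  # hypothetical average event size, not measured


def events_per_day(sources, interval_seconds):
    """One event per source per interval, summed over a day."""
    return sources * SECONDS_PER_DAY // interval_seconds


def daily_bytes(sources, interval_seconds, avg_event_bytes):
    """Estimated log volume per day in bytes."""
    return events_per_day(sources, interval_seconds) * avg_event_bytes


for interval in (1, 15):
    events = events_per_day(15, interval)
    volume = daily_bytes(15, interval, ASSUMED_EVENT_BYTES)
    share = volume / DAILY_LIMIT_BYTES
    print(f"interval={interval}s: {events} events/day, "
          f"{volume / 1e6:.1f} MB/day, {share:.0%} of the daily limit")
```

At a 1-second interval, 15 sources produce 1,296,000 events per day; at 15 seconds that drops to 86,400, a 15x reduction regardless of the actual event size.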

Am I happy with the ultimate product?

Yes, it’s much easier to manage and upgrade, and there is less friction for future expansion. In addition, by becoming reliant on Docker, I can make changes or add features and share them with the rest of the Docker community.

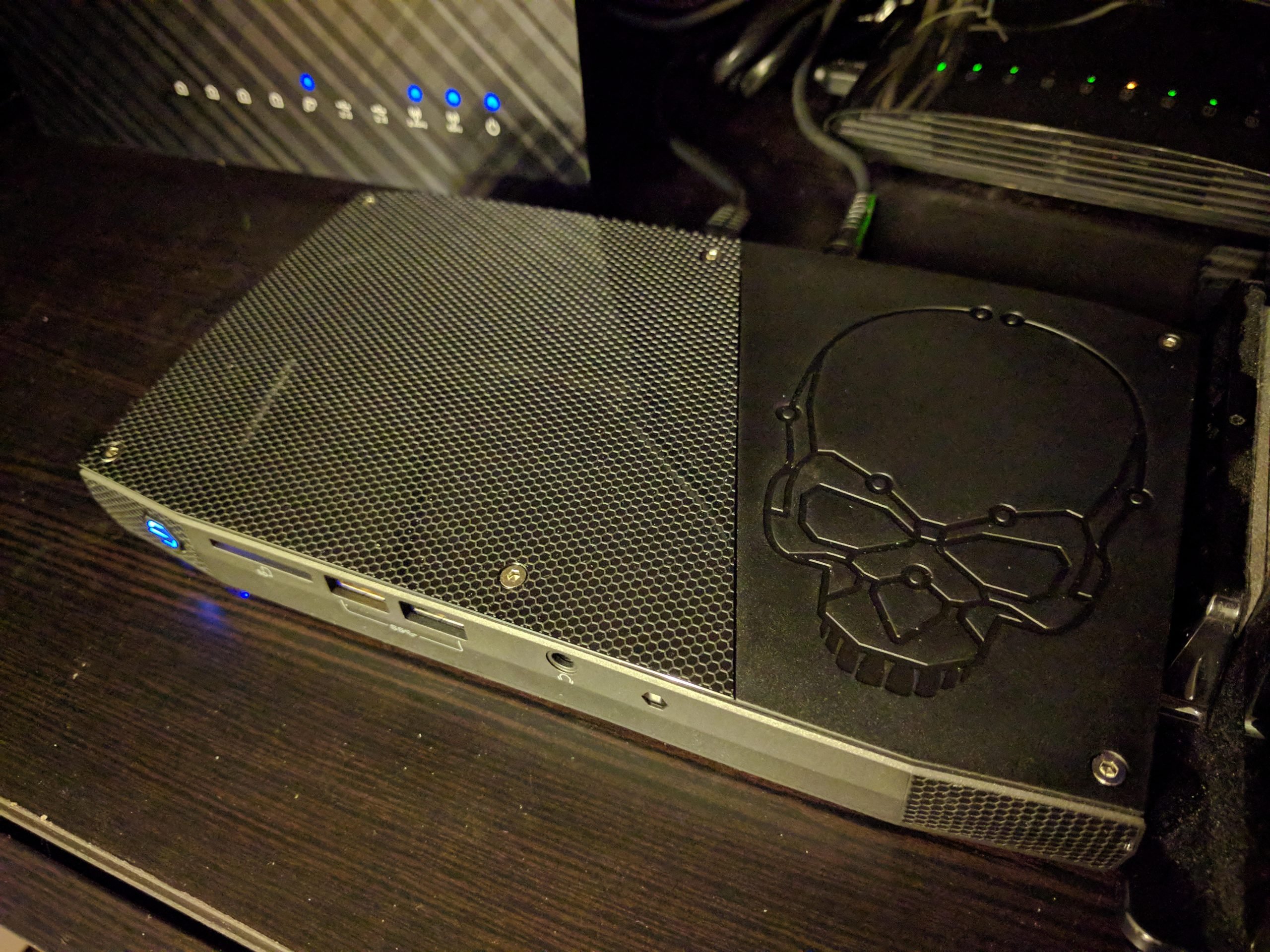

What does it look like?